This post is the second in a series about how to implement legal AI that knows your law firm. In the series, we cover the differences between LLMs and search, the elements of a good search engine, the building blocks of agentic systems (e.g., RAG), and how to implement a system that is fully scalable and secure and that respects your firm’s unique policies and practices.

Large Language Models (LLMs) are not search engines. As we discussed in the first post of this series, LLMs are trained on large datasets to generate text in response to prompts, based on patterns learned from that training data. If the answer you need is embedded in your firm’s proprietary data and previous work product, an LLM alone cannot access it or present it in a response, although it may still produce a credible-sounding answer based on its training data.

This is where Retrieval Augmented Generation (RAG) comes in. RAG is a technique that focuses an LLM on specific content retrieved through a search engine. It improves the accuracy and dependability of generative AI models by grounding their responses in factual information from sources outside the LLM’s training data.

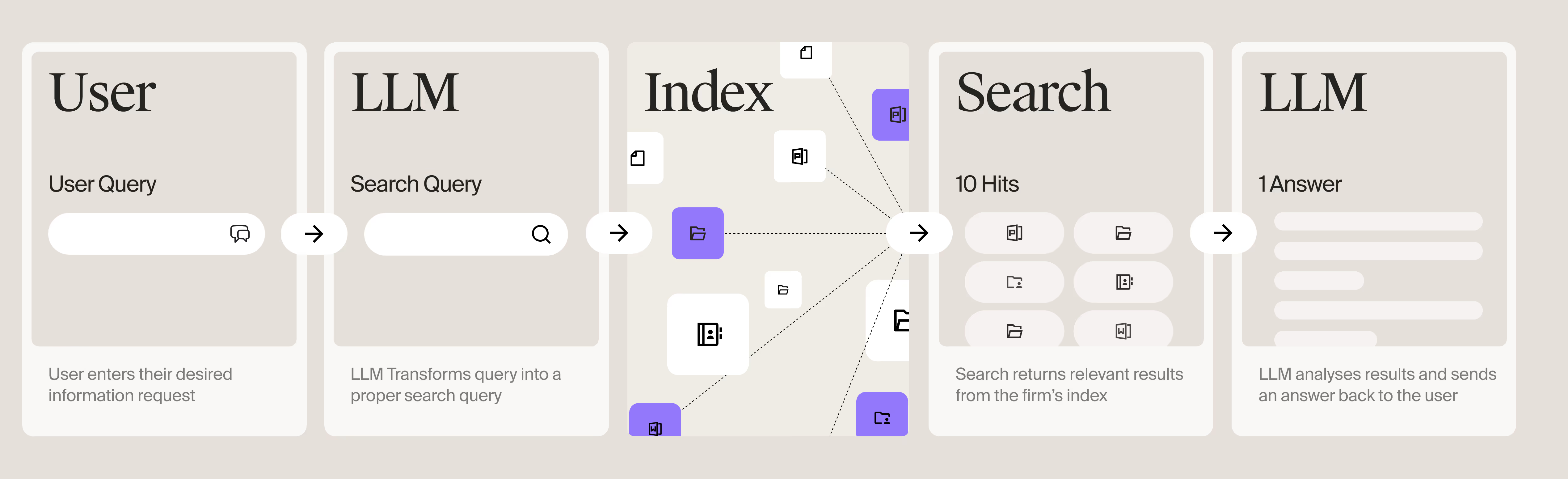

This is how a typical RAG flow goes:

- The user enters a request for information. For example: "Does AlphaBetaGamma Inc. have any merger deals that include earn-out provisions based on performance?"

- This request is sent to the LLM, which uses its natural language abilities to transform it into a well-formed search query (for example, "AlphaBetaGamma Inc. merger earn-out based on performance milestones").

- The search query is then sent to the search engine, which returns relevant results from the firm’s search index. In this case, the results would point to the earn-out sections of AlphaBetaGamma's merger documents held in the firm's data. Sections that mention performance milestones would be the most relevant and would appear at the top of the results.

- These search results are then sent back to the LLM, along with a prompt instructing it to analyze them carefully and use them to answer the user's question. The LLM's answer is returned to the user.
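The steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a production design: `fake_llm` is a hypothetical stand-in for a real model call, `search` is a toy keyword-overlap ranker standing in for the firm's search engine, and the documents, source IDs, and prompt wording are invented for the example. Each retrieved passage is labeled with its ID so the LLM can cite its sources.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Document:
    doc_id: str  # hypothetical source ID, included so the answer can cite it
    text: str


def tokenize(text: str) -> set[str]:
    """Lowercase, replace punctuation with spaces, and split into a set of words."""
    cleaned = "".join(ch if ch.isalnum() or ch.isspace() else " " for ch in text.lower())
    return set(cleaned.split())


def search(query: str, index: list[Document], top_k: int = 3) -> list[Document]:
    """Toy stand-in for the firm's search engine: rank documents by the number
    of query terms they share. A real engine uses far richer relevance signals."""
    terms = tokenize(query)
    scored = [(len(terms & tokenize(doc.text)), doc) for doc in index]
    scored = [pair for pair in scored if pair[0] > 0]  # drop documents with no overlap
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_answer_prompt(question: str, results: list[Document]) -> str:
    """Pass the retrieved passages back to the LLM with instructions to answer
    only from them and to cite each source by ID."""
    sources = "\n".join(f"[{doc.doc_id}] {doc.text}" for doc in results)
    return (
        "Answer the question using only the sources below, and cite the source "
        "ID for every claim. If the sources do not contain the answer, say so.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {question}"
    )


def rag_answer(question: str, index: list[Document], llm: Callable[[str], str]) -> str:
    # Step 2: the LLM rewrites the user's request as a search query.
    query = llm(f"Rewrite this request as a concise search query: {question}")
    # Step 3: the search engine retrieves relevant passages from the firm's index.
    results = search(query, index)
    # Step 4: the LLM answers from those passages; the answer goes back to the user.
    return llm(build_answer_prompt(question, results))


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call, so the sketch runs offline."""
    if prompt.startswith("Rewrite"):
        return "AlphaBetaGamma merger earn-out performance milestones"
    # Pretend to answer by listing the source IDs the prompt supplied.
    cited = [line.split("]")[0] + "]" for line in prompt.splitlines() if line.startswith("[")]
    return "Answer based on " + ", ".join(cited)


# Invented example documents standing in for the firm's search index.
index = [
    Document("DOC-1", "AlphaBetaGamma merger agreement: earn-out payable on revenue performance milestones."),
    Document("DOC-2", "AlphaBetaGamma merger agreement: earn-out section with fixed deferred payment."),
    Document("DOC-3", "Office lease renewal for the Denver office."),
]

question = ("Does AlphaBetaGamma Inc. have any merger deals that include "
            "earn-out provisions based on performance?")
print(rag_answer(question, index, fake_llm))  # prints: Answer based on [DOC-1], [DOC-2]
```

Swapping `fake_llm` for a real model client and `search` for an enterprise search engine would leave `rag_answer` unchanged, which mirrors the separation of roles between the two systems. It also makes the dependency plain: the LLM can only ever answer from whatever `search` hands it.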

This basic “user -> LLM -> search engine -> LLM -> user” flow enables a “conversational” search experience. Note that it depends on the connection between the LLM and the search engine, and that these two systems serve distinct roles. Even the best LLM in the world will perform poorly in a RAG system if a subpar search engine supplies it with irrelevant data: the principle of “garbage in, garbage out” applies. Therefore, any AI platform with RAG capabilities is only as good as its underlying search system.

As a first step, most firms would benefit from implementing an accurate, reliable, and comprehensive enterprise search engine that returns relevant, real-time data. Unfortunately, legal professionals have grown accustomed to lackluster search technology that is neither fully scalable nor fit for purpose. The next post in this series begins to explore why this is the case and how firms can improve on it.

To wrap up, effective RAG systems resolve ambiguity, clearly cite trusted data sources, and minimize hallucinations by grounding answers in factual data. A firm doesn’t need to train its own LLM, but it does need the smart orchestration between search engine and LLM that RAG makes possible. In the end, the goal is to maximize the benefits of GenAI without compromising the end-user experience.

Explore the blog series “Legal AI That Knows Your Firm”

Posts in this series:

- The Allure (and Danger) of Using Standalone LLMs for Search

- Why Retrieval Augmented Generation (RAG) Matters (This one)

- All Search Engines Are Not Created Equal

- Why Good Legal Search is Informed by the Entire Context of Your Institutional Knowledge—Not Siloed or “Federated”

- How Can Your AI Securely Use All of Your Firm’s Data?

- Why an “Always On” Search Engine is a Prerequisite for Scalable AI Adoption

- Building AI Agents That Are Informed by Your Real-World Legal Processes

- As the Variety of Tasks Automated by AI Agents Proliferate, How Does a Firm Manage It All?

- How Do I Adapt Workflow Agents to the Specific Needs of My Firm?

- Does Your AI Platform Set Your Firm Apart from the Competition?

This post was adapted from our 30-page white paper entitled "Implementing AI That Knows Your Firm: A Practical Guide."